Drive conversions with A/B testing in email marketing. Use best practices, experiment with split campaigns, and improve your strategies for impactful results.

Email Marketing A/B Testing: Maximizing Results through Split Testing

Compare the open and click rates of your emails within each segment. If an email's rates differ significantly from the others, prioritize updating its subject line, email template, or both, and then run an A/B test.

Now, let’s take a deep dive into the essentials of A/B testing: how to determine what to test, which email campaign elements you can test, and the best practices that can significantly enhance the impact of your email marketing.

What is A/B Testing?

A/B testing, also known as split testing, is a methodical approach used in marketing to compare two versions of a marketing activity (such as an email) to determine which one performs better. Essentially, it involves showing version “A” to one part of your audience and version “B” to another, then analyzing the results to see which version garners a more favorable response. This technique is grounded in the principle of using data-driven decisions rather than assumptions, enabling marketers to make informed changes based on actual user behavior.

A/B testing can be applied to various elements like email subject lines, webpage layouts, call-to-action buttons, and content strategies, making it a versatile tool for enhancing marketing effectiveness.

Benefits of A/B Testing

At its core, A/B testing has three (interlinked) goals in mind:

- Increased engagement

- Increased conversion rates

- Increased ROI

Here are some of the benefits of A/B email tests that help lead to these improvements:

Enhanced Personalization

Personalization is key in effective email marketing, and split testing helps you fine-tune it. By experimenting with different personalized elements, such as customized subject lines or content, you can determine the level and type of personalization that best appeals to your audience, making your emails feel more relevant. Through A/B testing, even bulk, automated email campaigns can feel tailor-made for each recipient. This approach also helps you time your emails to arrive when customers are most responsive and expecting them.

Optimized Content

One of the things you care about most when sending content to your prospects or customers is getting their attention. A/B email tests allow you to understand what type of content (informative, promotional, or educational) works best for each of your audiences. This insight enables you to craft your post-test emails with the content style and tone most likely to engage and convert your readers.

Reduced Bounce Rates and Unsubscribes

By sending campaigns that are more aligned with your subscribers' preferences and interests, email split tests can also help reduce bounce and unsubscribe rates. When emails are relevant and engaging, recipients are less likely to ignore them or opt out of your mailing list, which fosters a healthier, more engaged subscriber base.

How To Determine What To Test and Improve

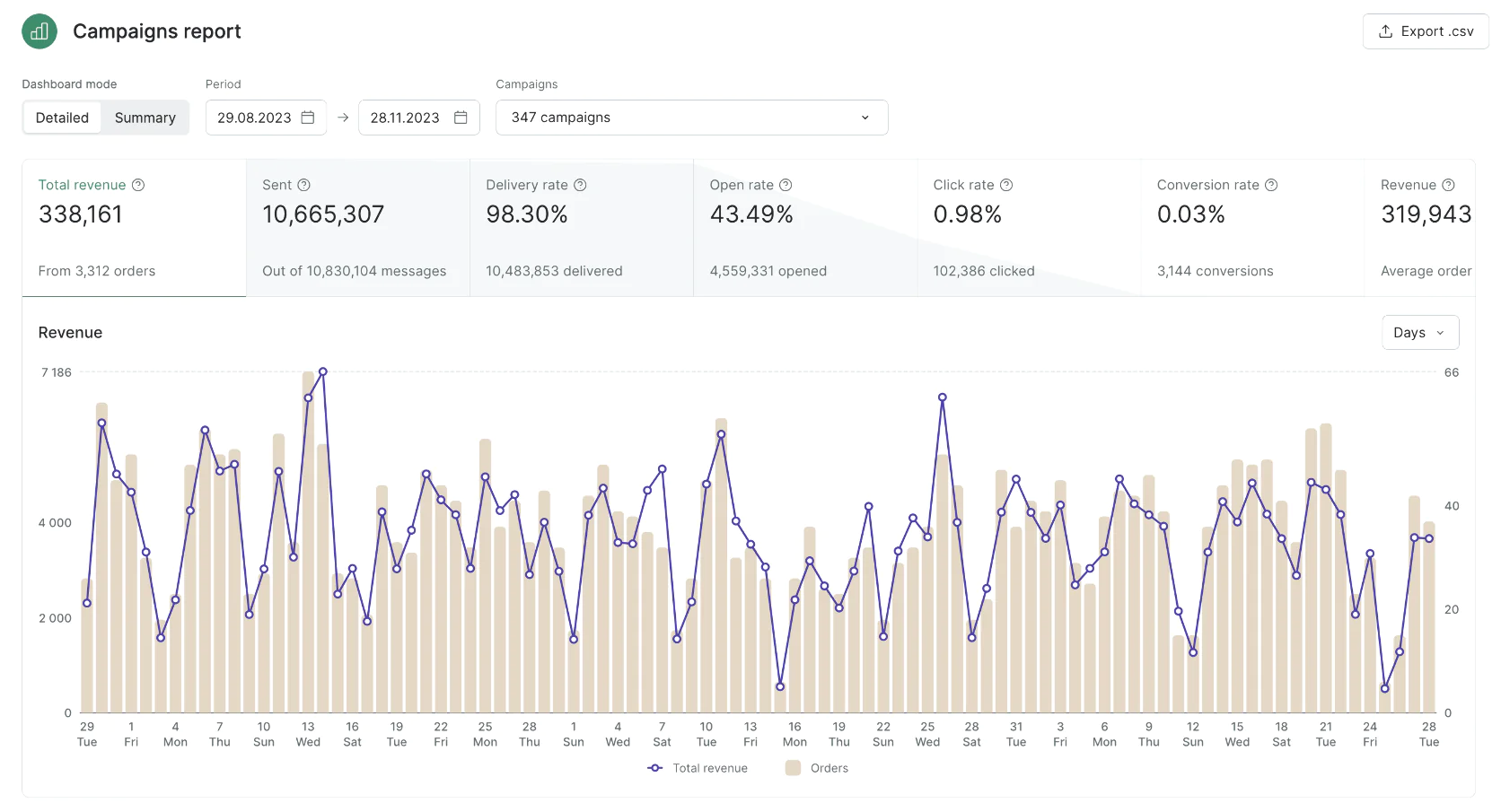

Understanding where to make enhancements in your email campaigns starts with analyzing your strategy. For this, you’ll need to access reports within your current email marketing system. From here on, we’ll use Maestra as an example, but you can use these steps as a general reference for other platforms:

1. Open the campaign summary report.

Campaign Summary report within Maestra

This report presents a funnel that includes metrics like Open Rate, Click Rate, and Conversion Rate for various campaigns (abandoned cart, abandoned browse, welcome emails, reactivation). For simplicity, let’s assume that your automated campaigns and segments are configured correctly and your website is functioning without any errors.

To determine which emails need improvement in Open Rates (OR) and Click Rates (CR), compare them across different segments. Segments can include newcomers, active clients, and those at risk of churn. Generally, active clients demonstrate the highest performance metrics, while those at risk show lower numbers. The number of segments and their characteristics can vary based on your business type, but the key here is always to compare metrics within the same segment for accurate analysis.

If the OR and CR significantly differ in certain emails compared to others, consider changing the subject, email template, or both. Here are some possible scenarios to consider:

High Open Rate, Low Click Rate

This indicates that while the subject line is appealing, the email content does not engage the reader. The subject might be misleading or the email template may have issues — such as broken links, excessive call-to-action buttons, or overwhelming text.

Low Open Rate, High Click Rate

A low open rate combined with a high click rate suggests that the subject line aligns well with the content but isn’t grabbing enough attention. The goal here is to make the subject line more eye-catching.

Low Open Rate and Low Click Rate

This could indicate an issue with the email’s appeal to the specific segment or a poor combination of subject line and content.

High Open Rate, High Click Rate, and Low Conversion Rate

If the OR and CR are good but the order conversion rate is lower compared to other messages sent to this segment, there may be an issue with links in the campaign or your website’s functionality — this can also be tested.
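The four scenarios above amount to a simple decision rule. The Python sketch below encodes it, assuming you have exported each email's rates and the average rates for the same segment. The `EmailMetrics` class, the `diagnose` function, and the 20% tolerance are hypothetical illustrations, not part of Maestra or any other platform. A fixed tolerance is only a rough screen; to confirm that a gap is real, check it for statistical significance.

```python
from dataclasses import dataclass


@dataclass
class EmailMetrics:
    """Rates for one email, expressed as fractions (0.15 == 15%)."""
    open_rate: float
    click_rate: float
    conversion_rate: float


def diagnose(email: EmailMetrics, baseline: EmailMetrics, tolerance: float = 0.2) -> str:
    """Compare an email against the average for the SAME segment.

    A metric counts as "low" when it falls more than `tolerance`
    (20% by default, an arbitrary illustrative threshold) below the baseline.
    """
    def is_low(value: float, reference: float) -> bool:
        return value < reference * (1 - tolerance)

    low_open = is_low(email.open_rate, baseline.open_rate)
    low_click = is_low(email.click_rate, baseline.click_rate)
    low_conv = is_low(email.conversion_rate, baseline.conversion_rate)

    if low_open and low_click:
        return "Low OR and low CR: rethink subject line and content for this segment"
    if low_open:
        return "Low OR, healthy CR: test a more eye-catching subject line"
    if low_click:
        return "Healthy OR, low CR: test the email template and content"
    if low_conv:
        return "Healthy OR and CR, low conversion: check links and website"
    return "No significant gap: no urgent test needed"


# Hypothetical segment averages and one email from that segment.
segment_avg = EmailMetrics(open_rate=0.20, click_rate=0.04, conversion_rate=0.010)
welcome = EmailMetrics(open_rate=0.22, click_rate=0.015, conversion_rate=0.008)
print(diagnose(welcome, segment_avg))
# Healthy OR, low CR: test the email template and content
```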

8 Email Variables You Can Test

Here are some ideas of email campaign components to focus on in your A/B testing strategy.

Subject Line

The subject line is the gateway to your email content, determining whether your campaign gets opened or ignored — testing different subject lines can reveal what grabs your audience’s attention, be it humor, urgency, personalization, or curiosity.

Here are a few tips for crafting effective email subject lines:

- Appeal to recipients’ emotions. Include powerful adjectives, trending words and topics (use Google Trends for this), experiment with synonyms and emojis.

- Personalization. Address the recipient by name to add a personal touch.

- Use the customer’s pain points. Incorporate elements of time sensitivity, intrigue, or a subtle sense of urgency to grab attention.

- Incorporate a question. Design a subject line with a compelling question that piques the recipient’s curiosity and desire for an answer.

- Experiment with different lengths. Some audiences respond better to a shorter subject line, others prefer more detail.

- Emojis. Test how different segments respond to emojis. Use them sparingly: no more than two (ideally one) per subject line.

Keep in mind that the subject line should create enough intrigue for the recipient to open the email while also fulfilling the expectations set by this subject line once they read the content.

For instance, baby care brand Libero experimented with different subject lines — offering recipients a more informative and educational subject line vs. one that appealed to their emotions:

| Subject line | Open rate | Click rate |
|---|---|---|
| Maria, a selection of useful and interesting articles! | 14.3% | 3.7% |
| Maria, you’ll love this email! | 19.5% | 7.3% |

As a result of this test, the team determined that this particular segment (parents of 17-month-old babies) responds best to “emotive” email subject lines.
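To express the Libero result as a relative improvement, divide the winning variant's rate by the other variant's rate and subtract one. The helper below is a hypothetical illustration of that arithmetic using the figures from the table. Lift alone does not tell you whether the difference is statistically significant; that also depends on how many recipients received each version, which is not stated here.

```python
def relative_lift(control: float, variant: float) -> float:
    """Relative change of `variant` over `control`, e.g. 0.25 == +25%."""
    if control <= 0:
        raise ValueError("control rate must be positive")
    return variant / control - 1


# Libero figures from the table, in percent.
open_lift = relative_lift(14.3, 19.5)
click_lift = relative_lift(3.7, 7.3)
print(f"Open rate lift: {open_lift:+.1%}")    # Open rate lift: +36.4%
print(f"Click rate lift: {click_lift:+.1%}")  # Click rate lift: +97.3%
```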

Preview Text

The preview text (also known as the preheader) acts as an extension of your subject line. It offers an additional opportunity to engage your recipient and persuade them to open the email. Test different lengths, clarity, and relevance of the preview text to the subject line. Depending on your audience, you might find that a more descriptive preview increases open rates, or perhaps a more mysterious and intriguing approach sparks curiosity.

Sender Name

The sender’s name can significantly influence the trust and recognition factor of your email. Testing variations like using a personal name (e.g., "James from Company") versus a general company name (e.g., "Company Team") can impact how recipients perceive and interact with your email. Personal names can sometimes make emails feel more personalized, while brand names might leverage brand recognition.

For instance, cosmetics brand Teana tested two emails that were identical in content but sent under different names: one from “Anna from Teana” and the other without a personal name. The personalized version outperformed the non-personalized one on click rate and conversion rate, while the non-personalized version recorded the higher open rate:

| Sender name | Open rate | Click rate | Conversion rate |
|---|---|---|---|
| Anna from Teana | 13.67% | 1.14% | 2.30% |
| Non-personalized | 19.94% | 0.96% | 0.55% |

Results for this test have a 95% reliability score and were collected using the last-click attribution method.

Content

The email body is where you deliver your message and engage the reader. Here, you have plenty of choice when it comes to A/B email tests, including:

Copy

- Tone — for instance, informative, formal, or warm and conversational.

- Paragraph lengths — from concise to more detailed content.

- Structure — test different approaches such as bullet points versus paragraphs to enhance readability and clarity.

Design

The design of your email is crucial in making a lasting impression on your audience. Effective design can enhance readability, engagement, and the overall impact of your message. Consider testing various design elements such as:

- Layouts — experiment with single-column versus multi-column layouts. Single-column layouts often offer simplicity and focus, while multi-column can provide more information and visual diversity.

- Color schemes — colors evoke different emotions and can significantly impact how your message is perceived. Test different color palettes to see which best resonates with your audience.

- Images — the types and placement of images can drastically affect engagement. Try testing different image sizes, locations within the email, and types (photos, illustrations, graphics, gifs) to understand what results in more engagement.

- Typography — the choice of fonts and their sizes can influence readability and the tone of your email. Experiment with these to determine what is most effective in conveying your message clearly and engagingly.

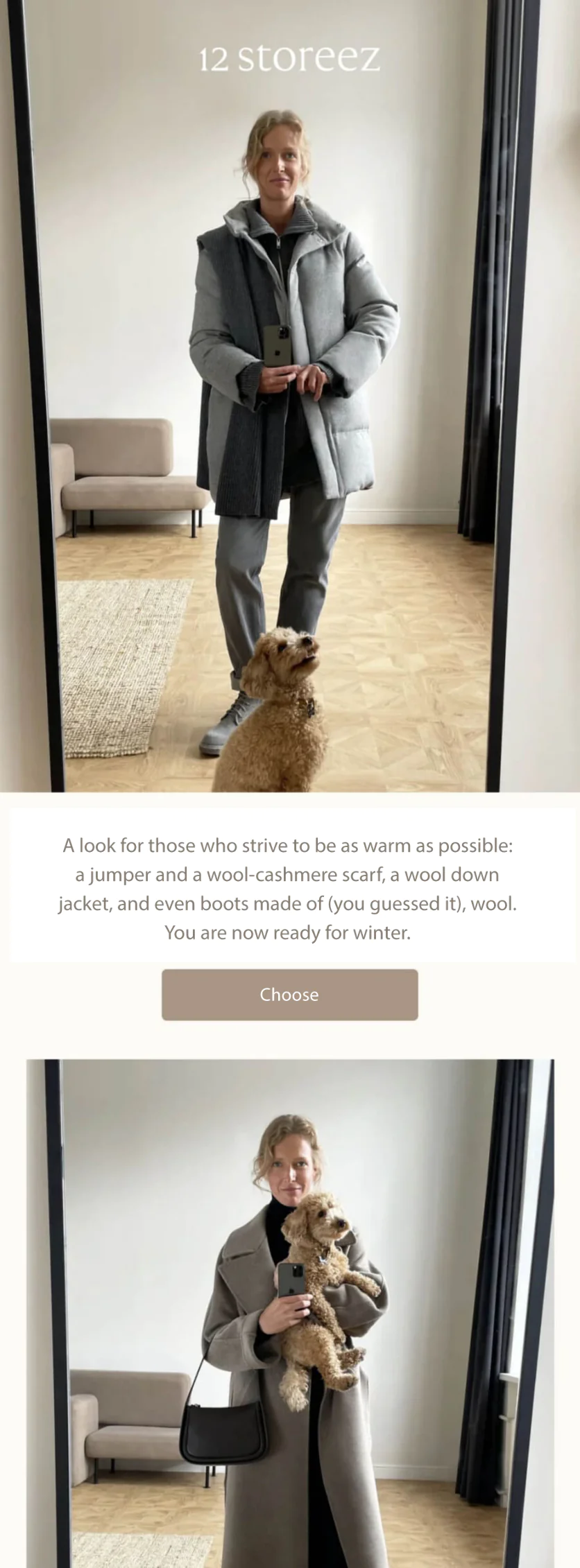

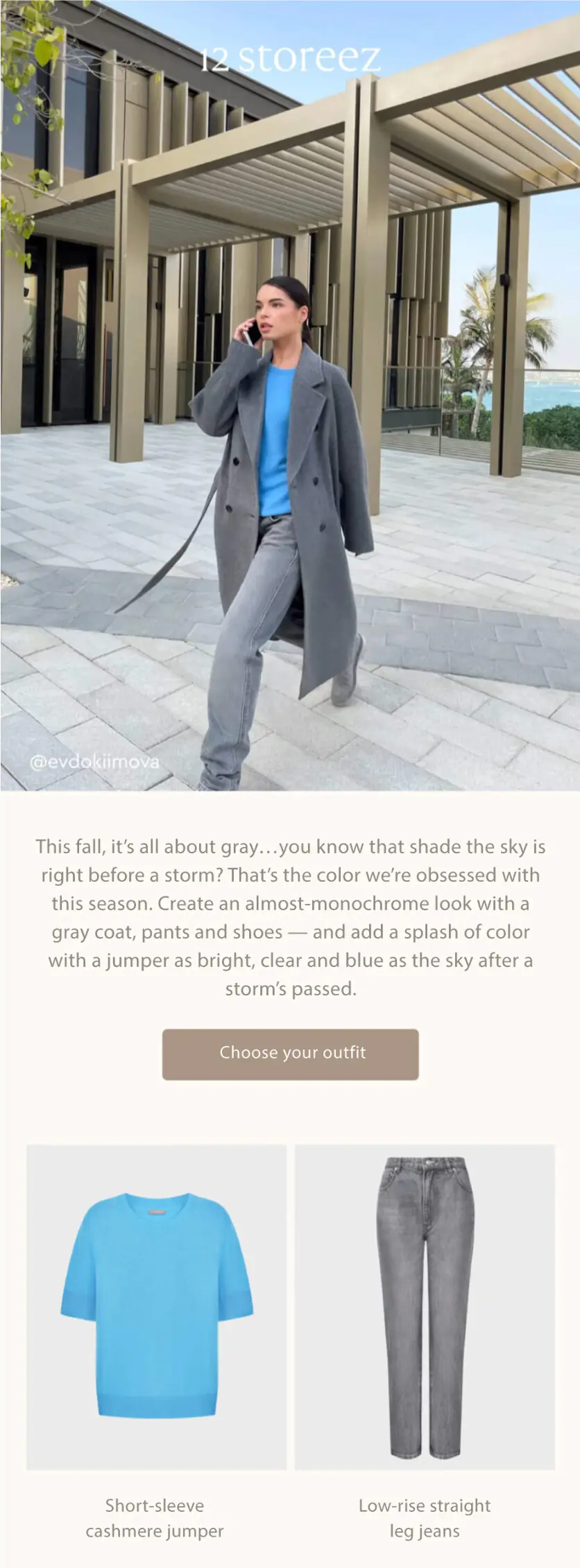

For example, fashion retailer 12 STOREEZ experimented with their email design to determine whether their customers engage better with a longer or shorter email.

Variant A: This email included 5 outfits. The customer needed to click the “Choose” button under the photo to expand the outfit description.

Variant B: This campaign contained one outfit.

This experiment showed a click rate almost twice as high for the shorter campaign (1.4% compared to 0.8%), which allowed the fashion brand to adapt future campaigns with this in mind.

Call-to-action (CTA) Buttons

Testing calls to action is critical for driving conversions. You can test different aspects of your CTA buttons, including:

- Wording ("Buy Now" vs. "Learn More")

- Design — size, color, and button vs. text link

- Placement in the email

These small variations can significantly impact the click-through rate and overall effectiveness of your email campaign.

Personalized Content Blocks

Personalized content blocks are distinct sections in an email, each serving unique purposes like product showcases or special offers. Consider experimenting with:

- Personalized recommendations. Try different approaches such as recently viewed items, popular items or browsed/popular categories.

- Special offers. Feature exclusive deals, discounts, or promo codes tailored to the subscriber’s interests.

- Product review requests. This can display unreviewed products from the customer’s purchase history.

- Bonus point balance. Experiment with different ways of showcasing the customer’s loyalty points balance and membership status. Test different approaches to motivate them to earn more points and upgrade their loyalty status.

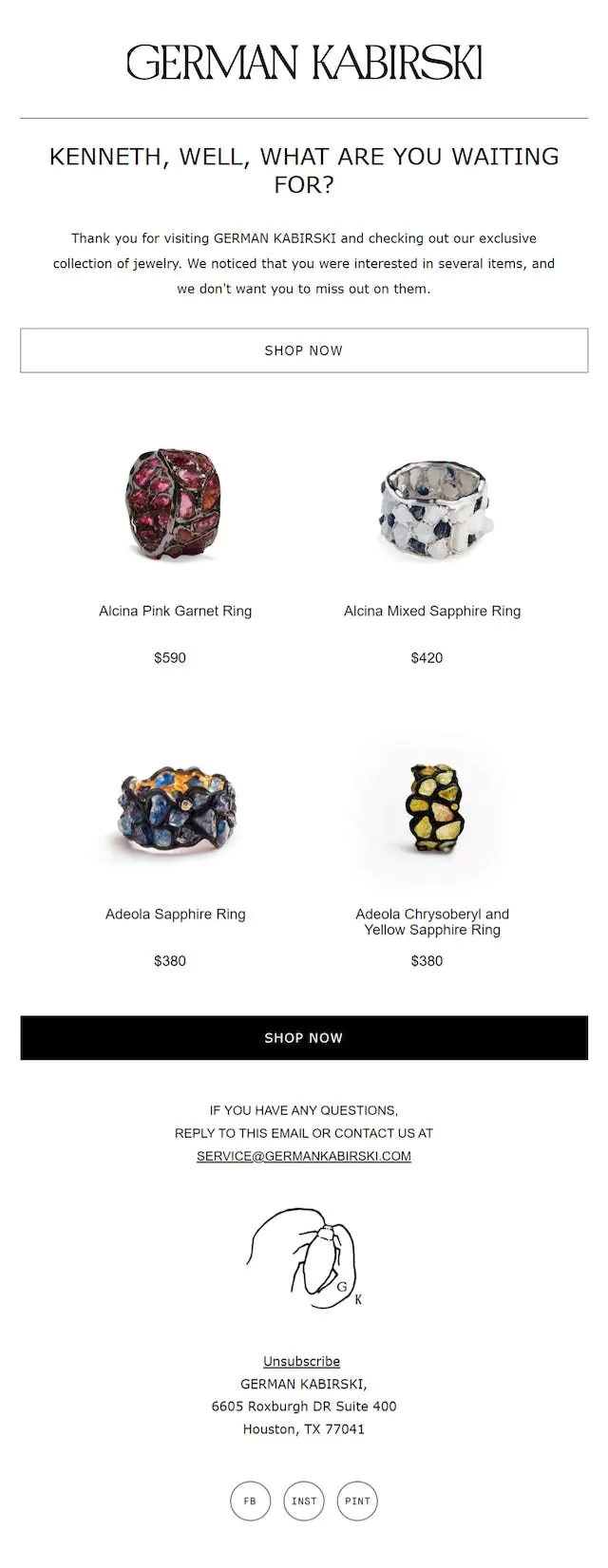

Jewelry brand German Kabirski uses different types of personalized content blocks in their campaigns — one example is a section showcasing the items the customer browsed in their last session:

Personalized email campaign from German Kabirski containing the customer’s browsing history

Sending Time

The timing of your email can affect its open and engagement rates. This can vary greatly depending on the demographics of your audience, their time zone, lifestyle, and even the industry you are in. Test sending emails at different times of the day and on different days of the week to identify the optimal time for your specific audience. Pay attention to how time variations influence open rates and engagement levels across different segments, countries, and age groups within your audience.

According to HubSpot’s research, recipients are most responsive between 9 AM and 12 PM and between 12 PM and 3 PM. The research also highlights Tuesday as the day when audiences are most engaged, with 27% of respondents affirming this.
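Once a send-time test has run, you can compare open rates per time slot. The sketch below assumes a hypothetical export of `(local_send_time, opened)` pairs, one per recipient, and groups them into 3-hour slots in each recipient's local time. The record format, the `open_rate_by_slot` function, the slot width, and the sample data are illustrative assumptions. Grouping by local time matters because your audience may span several time zones.

```python
from collections import defaultdict
from datetime import datetime


def open_rate_by_slot(sends, slot_hours=3):
    """Return {slot label: open rate} for (local_send_time, opened) records.

    `sends` is a hypothetical export: one tuple per recipient, where
    local_send_time is a datetime in the recipient's own time zone.
    """
    sent = defaultdict(int)
    opened = defaultdict(int)
    for local_time, was_opened in sends:
        start = (local_time.hour // slot_hours) * slot_hours
        slot = f"{start:02d}:00-{start + slot_hours:02d}:00"
        sent[slot] += 1
        opened[slot] += int(was_opened)
    # Sorting the zero-padded labels puts the slots in chronological order.
    return {slot: opened[slot] / sent[slot] for slot in sorted(sent)}


# Toy data for illustration only.
sample = [
    (datetime(2024, 5, 7, 9, 15), True),
    (datetime(2024, 5, 7, 10, 40), False),
    (datetime(2024, 5, 7, 13, 5), True),
    (datetime(2024, 5, 7, 14, 30), True),
    (datetime(2024, 5, 7, 19, 45), False),
]
print(open_rate_by_slot(sample))
# {'09:00-12:00': 0.5, '12:00-15:00': 1.0, '18:00-21:00': 0.0}
```

To compare days of the week as well, you could key the counters on `(local_time.strftime("%A"), slot)` instead of `slot` alone.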

A/B Testing Best Practices

To harness the full potential of A/B testing in your email marketing strategies, it’s essential to adhere to some fundamental best practices. The following guidelines will not only enhance the validity of your tests but also ensure that the insights you gain are actionable and reliable:

Test One Variable at a Time

Focus on changing only one aspect at a time in your A/B email tests, whether it’s an image, email subject line, or a call-to-action button. This ensures that any differences in performance can be attributed directly to that change.

Ensure Statistical Significance

To trust your test results, they must be statistically significant. This confirms that the results are not just due to chance but are a true reflection of the changes made. Use a tool like Maestra’s A/B Test Calculator. It can help you determine the sample size (how many recipients you need for a reliable result) for testing your open rate, click rate, conversion rate, and more. You can also use it after the test to see which variant performed best and whether the difference between them is statistically significant.
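If you want to see what a sample-size calculator does under the hood, the sketch below uses the textbook normal-approximation formulas for comparing two proportions: a per-variant sample size for a two-sided test, and a pooled two-proportion z-test for evaluating results afterwards. This is a generic statistical method, not necessarily how Maestra’s calculator works. The function names, the 5% significance level, the 80% power, and the example rates are assumptions for illustration.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Recipients needed in EACH variant to detect baseline -> expected.

    Normal-approximation formula for a two-sided test comparing two
    proportions at significance `alpha` with the given statistical `power`.
    """
    if baseline == expected:
        raise ValueError("expected rate must differ from the baseline")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_beta = NormalDist().inv_cdf(power)
    p_bar = (baseline + expected) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(baseline * (1 - baseline)
                                      + expected * (1 - expected))) ** 2
    return math.ceil(numerator / (baseline - expected) ** 2)


def two_proportion_p_value(successes_a: int, n_a: int,
                           successes_b: int, n_b: int) -> float:
    """Two-sided p-value of a pooled two-proportion z-test."""
    p_a, p_b = successes_a / n_a, successes_b / n_b
    pooled = (successes_a + successes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 1.0  # both groups all-0 or all-1: no evidence of a difference
    z = (p_b - p_a) / se
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Hypothetical planning: baseline open rate 20%, hoping to detect 22%.
print(sample_size_per_variant(0.20, 0.22))  # roughly 6,500 per variant

# Hypothetical results: 200 of 1,000 opens for A vs. 260 of 1,000 for B.
print(f"p-value: {two_proportion_p_value(200, 1000, 260, 1000):.4f}")
```

Halving the difference you want to detect roughly quadruples the required sample, because the sample size scales with the inverse square of that difference.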

Use a Control Group

Always have a control group that does not receive the new variation. This group serves as a benchmark, allowing you to compare the performance against the group exposed to the new element.
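One common way to create the control and test groups is to assign each subscriber to a bucket at random. The sketch below hashes a hypothetical test name together with the subscriber ID, so assignment is stable (the same person always lands in the same group for a given test) yet effectively random across the list. The `assign_group` function and the 10% control share are assumptions; depending on your setup, the control group might receive your current email or no email at all.

```python
import hashlib
from collections import Counter


def assign_group(subscriber_id: str, test_name: str,
                 control_share: float = 0.1) -> str:
    """Deterministically assign a subscriber to "control", "A" or "B".

    Hashing the test name with the subscriber ID gives a stable,
    pseudo-random bucket: the same person always gets the same group
    for this test but may get a different one in the next test.
    """
    digest = hashlib.sha256(f"{test_name}:{subscriber_id}".encode()).hexdigest()
    bucket = int(digest[:8], 16) / 0xFFFFFFFF  # value in [0, 1]
    if bucket < control_share:
        return "control"
    # Split the remaining audience evenly between the two variants.
    midpoint = control_share + (1 - control_share) / 2
    return "A" if bucket < midpoint else "B"


# Hypothetical list of 10,000 subscribers.
counts = Counter(assign_group(f"user-{i}", "subject-test-may") for i in range(10_000))
print(counts)  # roughly 1,000 control, 4,500 A, 4,500 B
```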

Account for Segment Preferences

Preferences can vary greatly across different demographics. The more precise your segments, the richer the insights you’ll gather for each unique group, so it’s worth spending some time on thorough segmentation before you begin testing. In addition, avoid a one-size-fits-all approach when extrapolating results: insights from one segment may not apply universally. Customize your strategies for each segment, recognizing their distinct characteristics and responses, for more effective and targeted campaigns.

Run Multiple Tests for Consistency

Conducting several tests over time is crucial to validate the consistency of your results. This repeated testing will help solidify your findings.

Test Across Different Email Clients

It’s important to remember that different email clients may render campaigns differently. To ensure consistency and accuracy in your A/B email testing, run pre-tests by sending your campaign to several email clients. This step allows you to identify and fix potential display issues or formatting errors before launching your email. By eliminating these variables in advance, you increase the reliability of your test outcomes, ensuring that any differences in performance are due to the content changes rather than technical discrepancies.

Final Thoughts

A/B email testing is an indispensable tool in the modern email marketer’s arsenal, fostering data-driven decision-making. It provides clear insights into what works and what doesn’t, allowing for continuous optimization of your campaigns.

Each email split test is a step toward refining your communication with your audience, making your messages not only more effective and targeted, but also driving more conversions and revenue. Ultimately, A/B testing is an ongoing journey of discovery and adaptation, essential for keeping your email strategies both responsive to your audience’s evolving needs and aligned with the latest advancements in digital marketing.