Discover how SEMrush, a global leader in digital marketing tools, automated email communications with Maestra to enhance user engagement and streamline data transfer for more effective email campaigns.

The Story of SEMrush: How We Automated Communications for a Transnational Marketing Service

SEMrush is an international company with over 650 employees from 4 countries, and 6 offices on two continents. It’s a global kit for internet marketing that includes tools for SEO, social media, PPC campaign management, and other marketing opportunities. Among their clients are eBay, Amazon, Skyscanner, Booking.com and others.

This case stands out: it’s written by our client as a first-person story. So we’re turning things over to Anastasia Armeeva, ex-head of email marketing at SEMrush.

Some notes about the author

To start, I would like to tell you a little about us. We are the email marketing team at SEMrush. We are one of the leading companies offering automation solutions for online visibility. There are thousands of new users in our database daily and we have over 4 million clients from different countries in total. There are 7 people in our team and we send out newsletters in 10 languages, 3 of which we speak fluently.

Our company’s mission is to make online marketing better. SEMrush is aimed at providing marketers with the tools to make their work better through the implementation of new solutions and experiments. Our team shares the same principles in our own work.

Cross-functionality is one of the agreed priorities in our company because this way we can perform the widest spectrum of tasks during the production cycle of our internal product. Many of my teammates are Excel masters, some can write SQL queries and even simple scripts in Python, some know how to use Photoshop. And, of course, we need to constantly implement complex logic to send various campaigns to our user base. That’s why we use Maestra.

How we chose the platform

I feel that I need to tell you something about myself in advance, as it would be unethical not to mention it: prior to SEMrush, I worked at Maestra.

But I wasn’t ready to deal with integrations for the sake of integrations at the expense of my new team’s efficiency. Moreover, it’s embarrassing for any normal person to drag former colleagues in just to fill the role of a vendor.

Ultimately, we shifted to Maestra because our previous email marketing provider (I don’t like to point fingers) didn’t have enough functionality to implement even 30% of our ambitious marketing plans.

I dragged out the decision for quite some time trying to find arguments for maintaining the status quo, but I just couldn’t find any. I still had some doubts, even on the last day. We were very excited about the prospect of new technical opportunities, but at the same time, it was a leap of faith for my team. My colleague compared it to high-school graduation. (In the picture below, we’re celebrating our transition to Maestra):

Jumping a bit forward, I have to say that over the course of this year, we have had no regrets about our decision at all.

DIY personalized communications

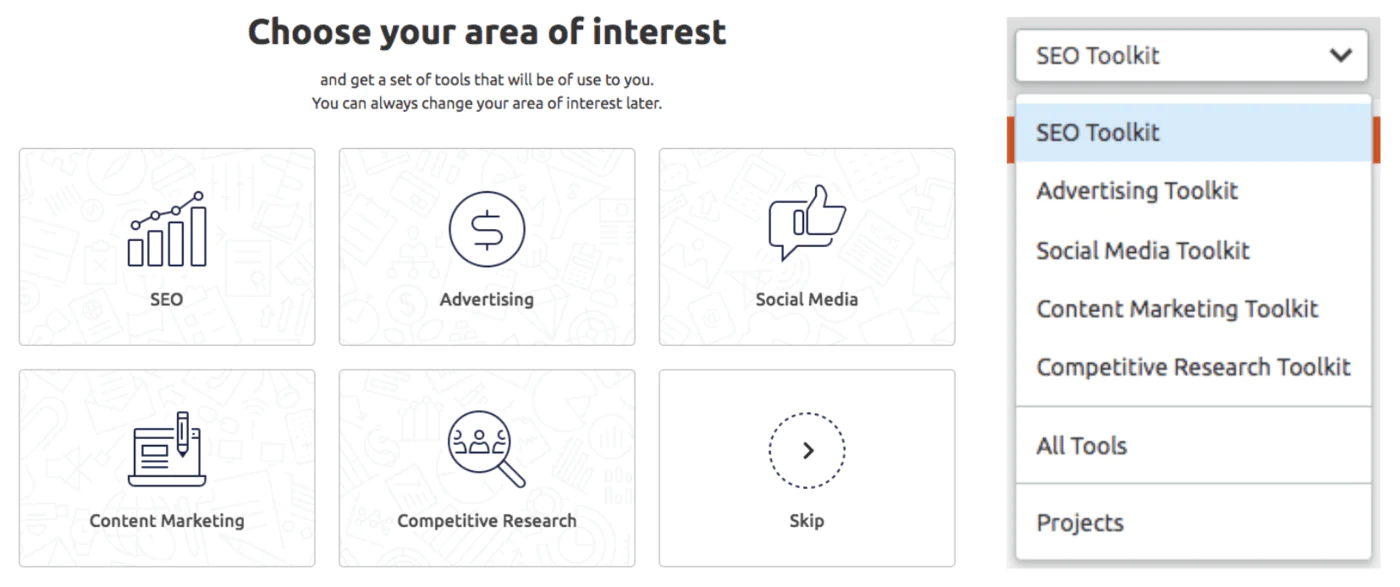

SEMrush is a platform for digital marketers of all stripes, from SEO to SMM managers. Thus, when it came to sending the first of our welcome emails, we needed to determine which of our main product features a specific user would be most interested in.

We always had data on clients’ activity in different tools at hand, but the data was not suitable for this purpose. First of all, part of it is aggregated daily to fit the conceptual framework of our analytics team. Secondly, many users don’t carry out corresponding actions during their first session at all. Bearing all of this in mind, we had to decide what to include in the first email that is sent within an hour after a user signs up.

Moreover, prior to Maestra, transferring any extra data required the help of a developer. Even integrating a front-end tracker for the website had to be carried out by a dev (not to mention that our development team is always suspicious about the very fact of integrating third-party scripts into website code, for security reasons). And of course, transferring anything from the back end was simply out of the question.

Thanks to our Maestra integration, our team can set up a data transfer through GTM from the website front end without involving anyone else.

For example, to determine the areas of interest of a user, we utilize data regarding events from our website’s landing page and the tool selected in the side menu of our product’s interface:

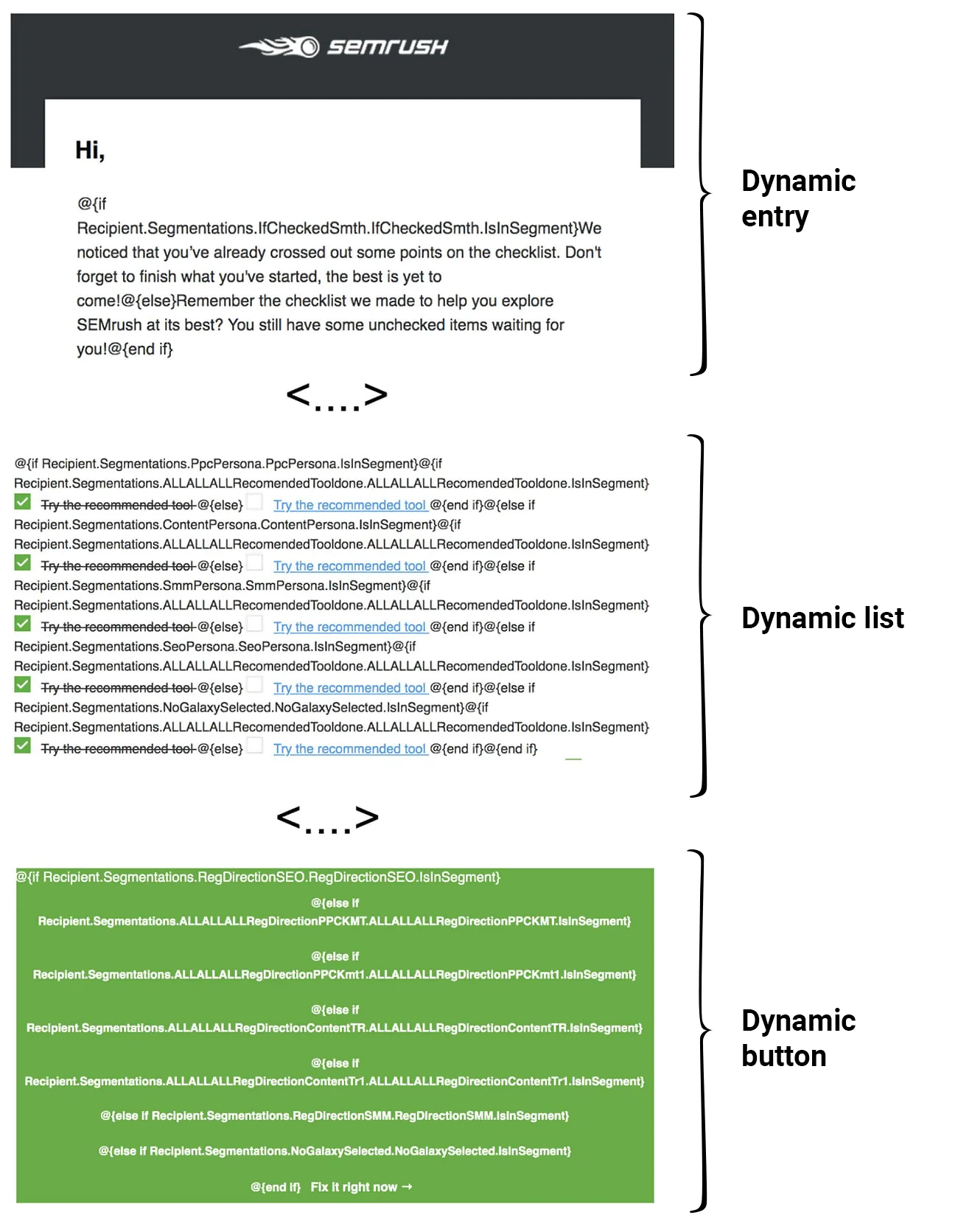

Thus, we know the areas of professional interest of most of our users when sending the first email. The emails look almost identical, but the links within lead to different articles, tools, and webinars — which is all possible thanks to the template engine’s functionality.

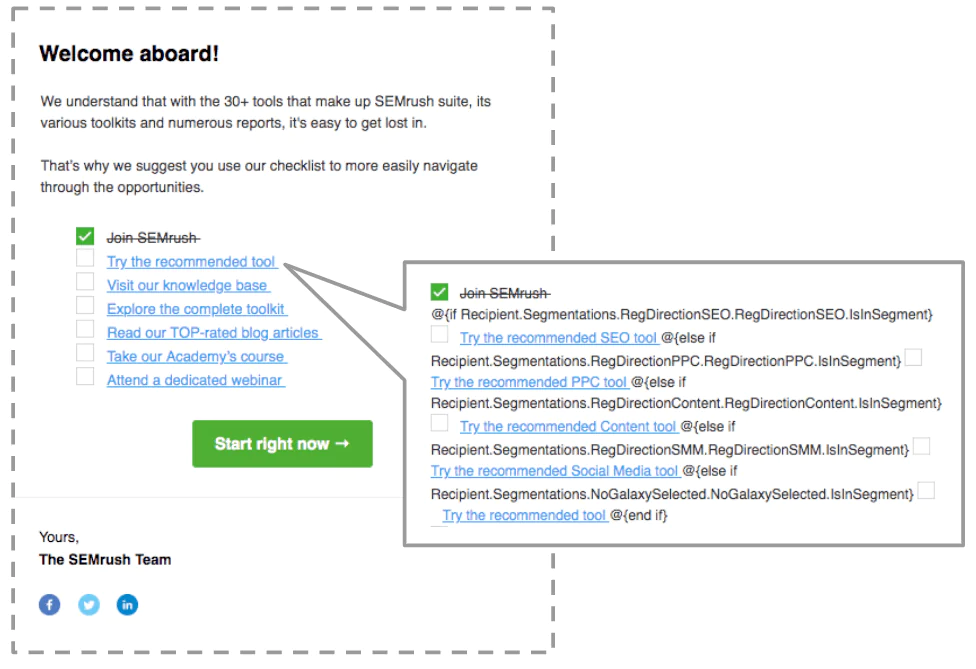

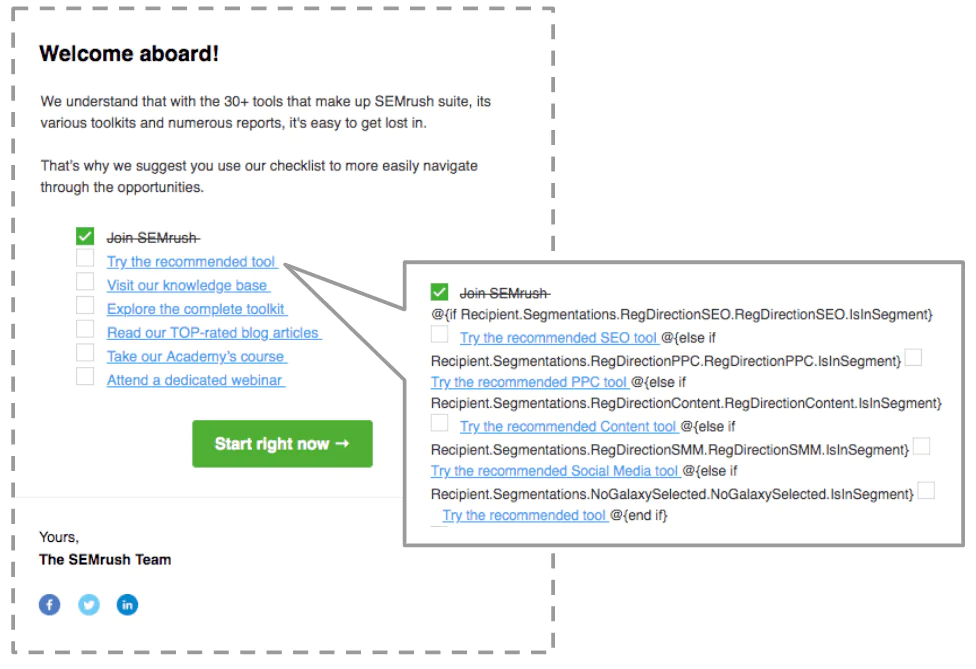

Each link in the email has a branched condition system, depending on which segment a client belongs to

Within a few days we can already see a user’s progress in learning how to use the product:

What the email looks like for a user

And here’s what our marketer sees. It’s possible to customize the content displayed in the template engine depending on the segment to which the user belongs

“We are constantly running experiments, testing everything and working with data as we deal with a SaaS product in a transnational company. And unlike most western CRM and email services, Maestra is a unique tool, outdoing its competitors in many aspects, supporting diverse integrations from front end via GTM to back end with a database.

We can customize Maestra depending on what the business needs, and that enables our email marketing team to reach company goals faster than our rivals do. And, perhaps most importantly, the Maestra team provides an account manager and a support team that are always genuinely interested in helping us bring our ideas to fruition.”

A few words on the importance of a control group

Besides being an email marketing team lead, I’m also the owner of a cross-team and cross-channel Customer Journey management project. Keeping that in mind, it’s crucial for me not only to find effective scenarios within the framework of my channel but also to gain universal knowledge from our experiments, which could be extrapolated to some extent into reality beyond specific letters. To illustrate this point, I want to give you a fresh example.

In our product, there is a tool called MyReports. It’s an assistive tool that allows us to merge reports from the other tools, visualize them, and export them as beautifully designed documents. We know that the share of users with a paid subscription is significantly higher among MyReports users than among those who never used the tool. At the same time, it’s difficult to pinpoint the exact connection between cause and effect: is it the tool that’s stimulating purchases, or does it just draw the attention of users who are already inclined to purchase because of other factors? For example, it seems like the tool should be interesting for agencies, but at the same time they would want to have several projects in Position Tracking, which is possible only for paid subscribers.

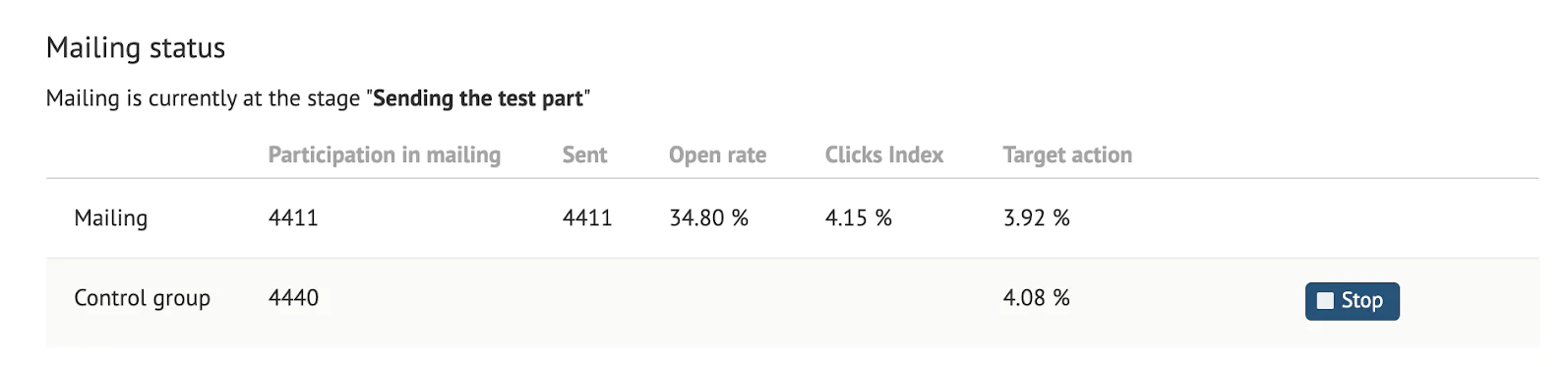

To check whether MyReports contributes to the purchasing decision, we launched triggered campaigns with a control group for users that were using one of the two biggest tools integrated with MyReports.

In the email template, we focused on interactive visualizations and kept boring texts to a minimum.

As a result of this test, we compared the first payments and actually discovered a statistically significant difference between the control and experimental group with a confidence level of 97%. The difference was odd — we saw a 27% increase in the conversion rate. But what was even more interesting is that in this case, 75% of those who participated in the experiment didn’t even open the email. That means that this tool played an even bigger role in pushing towards a purchase decision than the data shown by the email channel.

This insight was important not only to us, but also to the product owner, the product marketer of our emerging Monitoring & Reporting direction, and other marketing channels. To be more specific, we managed to acquire these results prior to launching the remarketing campaign, and they came in handy, because the specifics of forming small audiences in paid traffic do not always allow for the designation of a control group, and the channel costs money.
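The comparison of first payments between the control and experimental groups can be sketched as a two-proportion z-test. This is only an illustration: the article does not say which test was used, and the group sizes and payment counts below are hypothetical numbers chosen so that the uplift matches the 27% mentioned above.

```python
import math


def two_proportion_z_test(conv_control, n_control, conv_test, n_test):
    """Return (z, two-sided p-value, relative uplift) for two conversion rates."""
    p_control = conv_control / n_control
    p_test = conv_test / n_test
    # Pooled conversion rate under the null hypothesis of "no difference".
    pooled = (conv_control + conv_test) / (n_control + n_test)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_test))
    z = (p_test - p_control) / std_err
    # Standard normal CDF expressed through the error function.
    cdf = 0.5 * (1 + math.erf(abs(z) / math.sqrt(2)))
    p_value = 2 * (1 - cdf)
    uplift = (p_test - p_control) / p_control
    return z, p_value, uplift


# Hypothetical counts, NOT taken from the article.
z, p_value, uplift = two_proportion_z_test(200, 10_000, 254, 10_000)
print(f"z={z:.2f}, p={p_value:.4f}, uplift={uplift:.0%}")
```

With a 97% confidence level, the difference counts as significant when the p-value is below 0.03.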

The first test… flopped

When we tested the campaigns with a control group the first time, we failed. But I think it’s important to shed light on the case, because if we’d launched the test without a control group from the start, we’d still believe that this triggered campaign was effective.

There is a group of UX elements on our website aimed at selling a paid freemium subscription to our users. Buttons in the right corner of the screenshot below lead to the pricing and the payment page. Our plan was to consider these events as a sign of higher interest in the features available in the paid subscriptions.

As usual, we took our time to make a super dynamic template with millions of cycles inside and three external conditions. The button in the email leads to a detailed description of limits for different types of subscriptions. Then, if the client still hadn’t purchased a subscription, we sent them another email in two days with a 15% discount for the first month.

We understood that there was a high, almost 100% risk that we would lose a share of organic purchases due to sending out such a large number of emails with discounts. So, in light of this, we launched a trigger campaign with a control group to assess the usefulness of these automated emails in an objective manner.

It appeared that, despite all of our technical tricks, this email campaign did not change anything in terms of user behavior:

This (naturally) upset us at first. Then we analyzed the campaign once again and concluded that the result is rather explainable. As a matter of fact, the following hypothesis came to light: users who reached that button were not only more confident, but also understood better what exactly it was that they wanted. In this sense, to influence the decision of such users is even more difficult, because there is less cognitive uncertainty to be filled artificially. As a result, the range of marketing hooks relevant for each of these users is smaller and more individual than on average.

The second campaign provided lots more value

In such a situation, it seems that more customization and intensity in direct-channel communications are needed to work with clients more effectively. For example, during a conversation, an experienced salesperson can better identify the needs of a potential client and thus explain the value of a technically complex product. We decided to automate the first email of regional managers with such users.

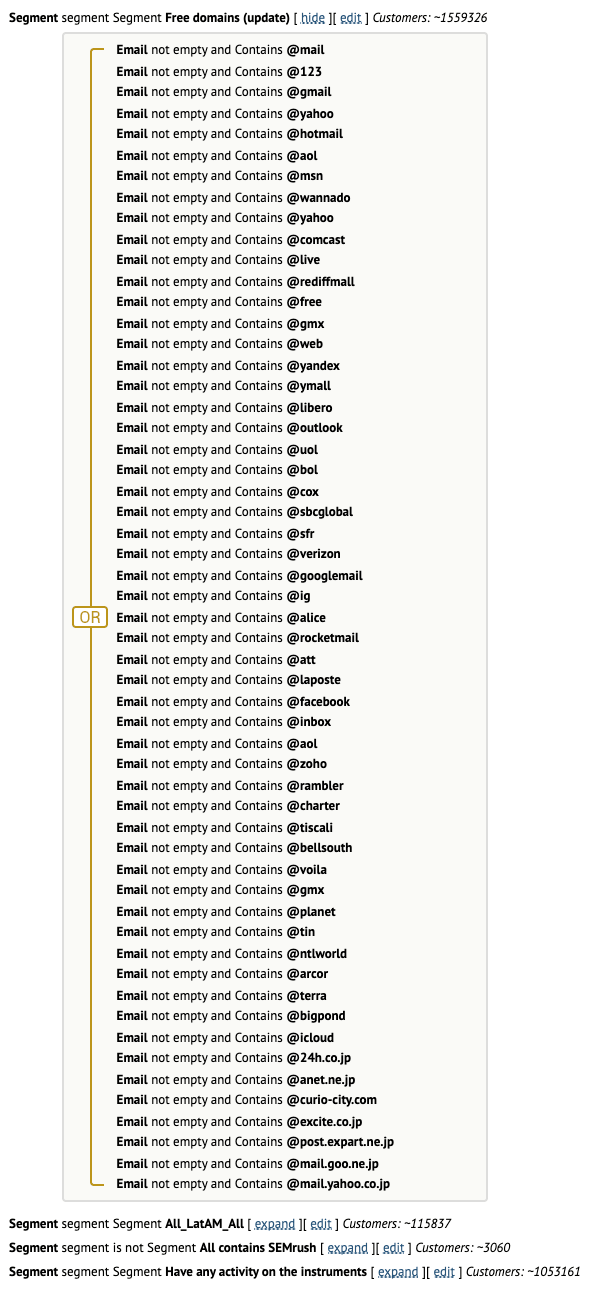

We tested the first pilot automation in Latin American countries. It’s known that the conversion rate for a small circle of users with corporate email addresses is many times higher than for others. That’s why the regional manager manually sent personal emails to users with corporate email addresses while only answering incoming free user queries, thereby optimizing resources.

Since the triggered campaign doesn’t require human intervention after being set up, we decided to try automating bulk campaigns for the non-corporate segment that usually stayed out of our proactive communications.

We selected the most popular Latin American domains and checked if the address belongs to any of them prior to sending a letter.

Settings for the Latin American segment of users
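The domain check described above was configured in Maestra's segment settings; as a rough sketch of the same logic in code, one could compare the domain part of an address against a fixed list. The domains and function names below are hypothetical placeholders, since the article does not list the actual ones.

```python
# Hypothetical placeholder domains; the article does not name the real list.
REGIONAL_DOMAINS = {"example.com.br", "example.com.mx", "example.com.ar"}


def email_domain(address):
    """Return the lower-cased domain of an email address, or None if malformed."""
    local, sep, domain = address.strip().rpartition("@")
    if not sep or not local or not domain:
        return None
    return domain.lower()


def in_regional_segment(address, domains=REGIONAL_DOMAINS):
    """True if the address belongs to one of the selected regional domains."""
    return email_domain(address) in domains


print(in_regional_segment("User@Example.com.br"))
print(in_regional_segment("user@corporate.example.org"))
```

Matching on the exact domain (rather than a substring) avoids accidentally including corporate addresses that merely contain a popular domain name.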

That is what our campaign for visitors of the pricing page looked like. We sent this a few days after the standard welcome communication ended.

The letter was sent from a virtual mailbox that coincided with the working address of our sales manager. This way, answers would land directly in a designated folder in his inbox.

The results from Latin America were close to zero, but the main purpose was to test the technical aspects and see if it would suit the sales department’s business processes. It’s known that sales culture varies a lot from country to country, which is why we are still testing these ideas in other regions regardless of the outcome in Latin America. We make adjustments according to our sales department’s demands, for example in Japan, where SEMrush operates through a reseller.

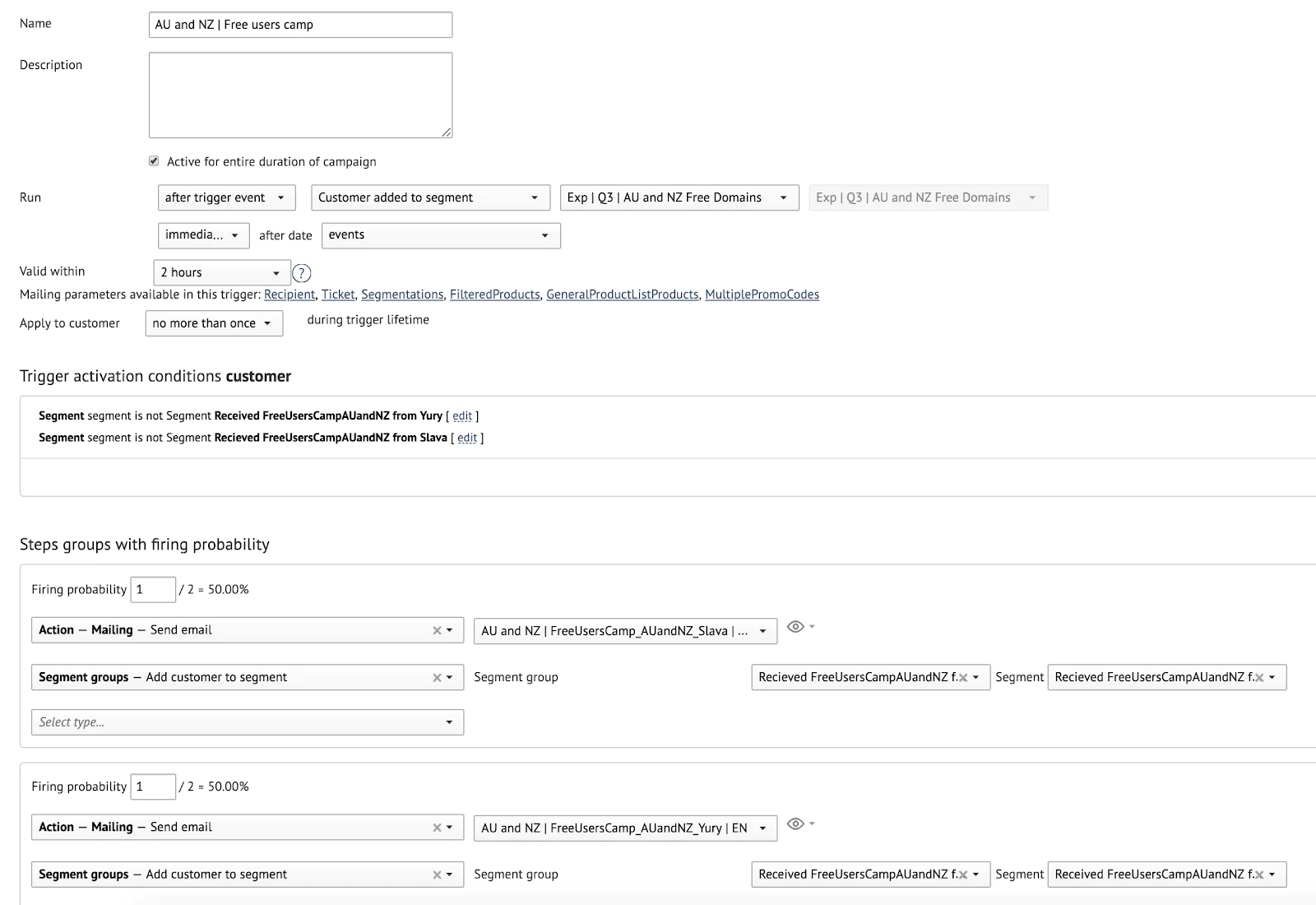

As we may have several sales managers per region it’s important for us to distribute the flow of sent emails randomly so that replies will be distributed evenly among the managers. This is how we set this up in Maestra:

The trigger randomly distributes emails into a few segments
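The idea behind this setup can be illustrated with a short sketch: each user is assigned to one of several sender segments uniformly at random, so that over many sends the replies spread roughly evenly. This is not how Maestra implements it internally; the manager addresses and function name are hypothetical.

```python
import random
from collections import Counter

# Hypothetical sender addresses for one region.
MANAGERS = ["manager_a@example.com", "manager_b@example.com", "manager_c@example.com"]


def assign_sender(user_ids, managers=MANAGERS, seed=None):
    """Map each user id to a randomly chosen manager (uniform distribution)."""
    rng = random.Random(seed)
    return {user_id: rng.choice(managers) for user_id in user_ids}


assignment = assign_sender(range(3000), seed=42)
# Each manager should end up with roughly a third of the users.
print(Counter(assignment.values()))
```

Passing a fixed `seed` only makes the sketch reproducible; in a live trigger the assignment would simply be random on each send.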

It’s too soon to draw a final conclusion on this campaign’s success, but for better or for worse, we couldn’t move on without experiments.

“Maestra is a service you can be proud of and should recommend to others.

We came to that conclusion when we set up our first triggered newsletter. A year later, we have more than 150 triggered scenarios and run approximately 200 monthly mailings with a team of only 5 people.

It may sound banal, but Maestra provides opportunities that are limited only by a marketer’s imagination. It has plenty of untypical mechanics in its arsenal, ranging from segmentation settings to integrations with various services, and we never experienced rejection from their support team when carrying out our work either.

Having had the chance to compare Maestra with other solutions, I can tell you for sure: Maestra definitely outdoes its competitors in many respects, especially in terms of functionality and value for money.”

A lot of suffering is bound to bring some results

We have one test that we ran through 5 iterations before finally getting our piece of desired information: in this very situation, it’s better to send something than to send nothing at all.

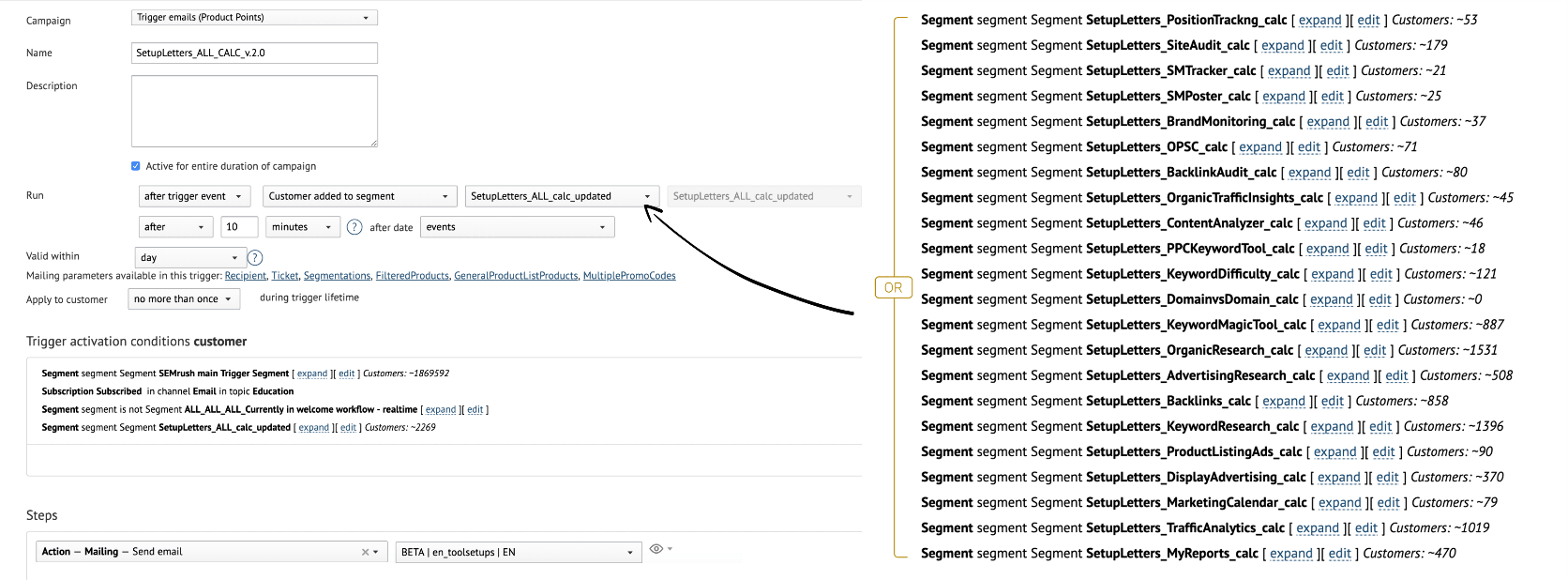

From the point of view of the business, the hypothesis was very simple. We have a very complex and diverse product. Given this, if a user had a one-time episode of activity with tool X that was not repeated within a period of time Y, it’s quite likely that the user simply didn’t understand how the tool works and what its purpose is.

As we saw in the example above, aggressive and quick communications along the lines of “no time to explain, just take this discount” aren’t effective enough, so we decided to do some honest lead nurturing and offer our clients educational materials for tools that seemed to spark an interest, at least once. Of course, we weren’t completely selfless, for we hoped that ultimately this would impact the quantity of paid subscriptions.

But in practice, everything ended up being much more complex…

First, it was clear that sending an email for each action and every tool was not possible, because then a user would receive several very similar emails in one day. At the same time, limiting the interval between different campaigns led to a group of tools that, in such a scheme, systematically overruled the other tools. This was a problem for two reasons: there was no theoretical basis to assume that the first tool used within the first session is more important to that specific user than the second one, and the conversion-to-payment rate for such tools is frequently lower than for the rest. Accordingly, there had to be just one trigger.

Secondly, as per the original model of your humble servant, this trigger had to be reusable but, at the same time, protected against sending the same block twice.

Let’s throw in a bunch of tiny nuances too, like different Y periods of time for different tools, and now the trigger from hell is at your service:

To be exact, it’s the third version. Due to the author’s unreasonable stubbornness (for example, an unfounded demand to have a reusable email), we couldn’t use the standard functionality of the Maestra control group at the template level. We had to jump to temporary solutions consisting of random triggers. It was not possible to follow a correct design for the experiment on our first try.

Ultimately, we gave up the idea of reusability after our Maestra manager had been admonishing us for a while. To be honest, we should have done so earlier. Please, don’t be like us. Test your MVP with the help of standard features and only then try to reinvent the wheel, if you absolutely must.

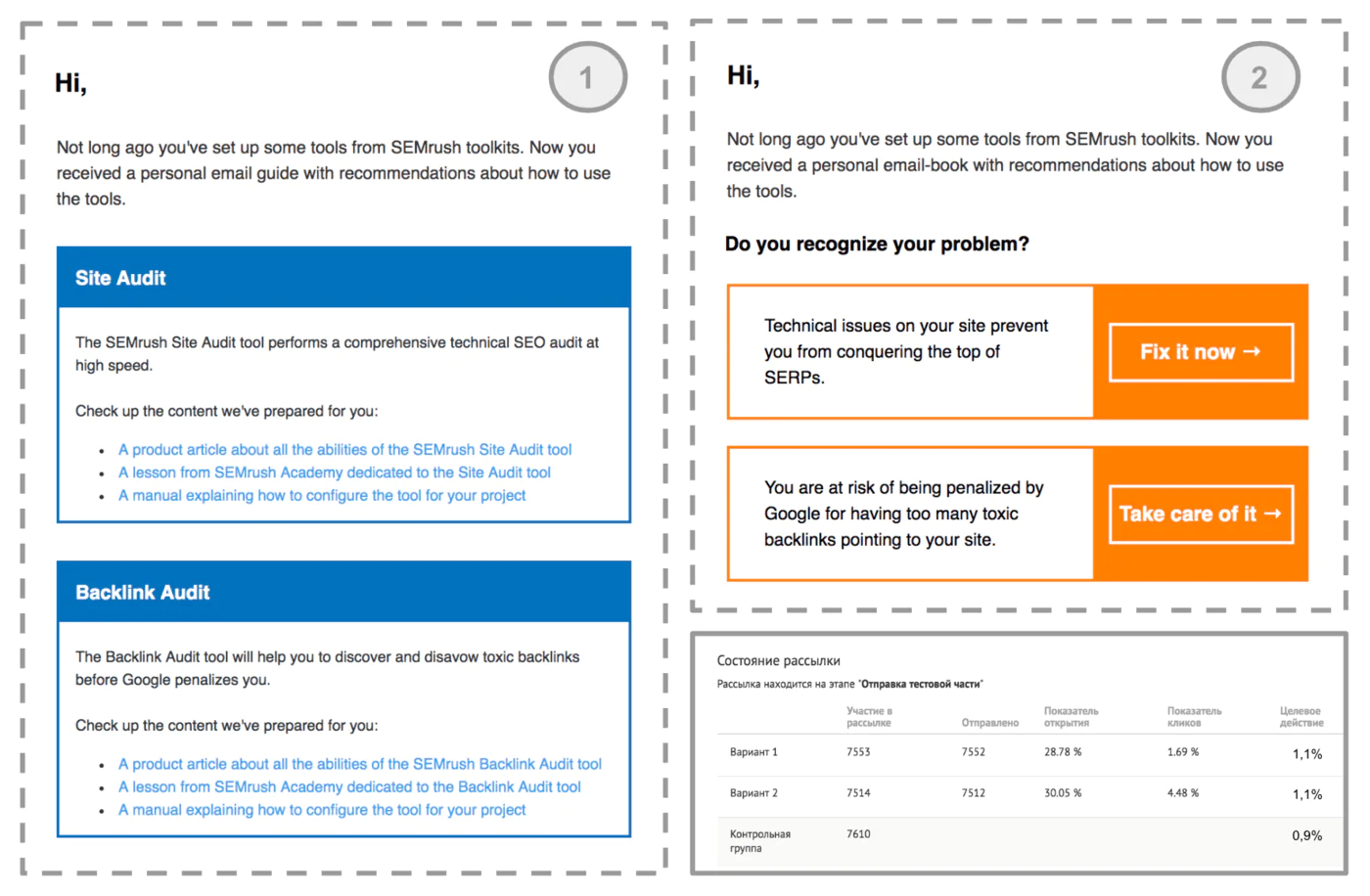

During the testing of our last version, we suddenly realized that we were tripping over our own logic and had compiled a very dubious template, with strange text and design. This iteration gave us a significant difference between the experimental and control groups, in favor of the experimental group. But then we discovered an issue that could have impacted our results. Because of that, in our final iteration we still used a control group and 2 versions of the text/design: old/boring and new/provocative.

In the end, if you allow yourself some freedom in how you interpret the data and sum up other versions of the mailing, it’s clear that it’s better to send dazed and confused clients some educational materials, as opposed to not doing that at all. Based on this, we switched off the control group and continued with the version testing.

In the end, we discovered that despite significant differences in terms of clicks, there is no difference in payment. In other words, the new provocative version is really just clickbait. But we left it anyway, since it’s not worse in terms of the target metric, and we like it more.

But sometimes it’s enough to simply…

Chasing universal conclusions, we sometimes tend to play with complex automation too much and forget about the Pareto principle, which states that roughly 80% of the results come from 20% of the efforts.

To put it in context, in the case of email marketing, instead of building rockets across hundreds of different segments, sometimes it makes sense to just test the subjects. Although that’s an obvious statement, I have to admit that we often act like it doesn’t exist, and it comes to mind only sporadically, when we witness its random side effects.

For example, when we tested a template (design+text) for one of our automated mailings which we mentioned above, we found out that there was an open rate gap forming for test versions with the same letter subject as the sample size grew (it’s worth noting that the intro paragraph of the letters is the same).

And then the answer turned out to be simple: in one of the versions, a “Do you recognize your problem?” subheading appears at the very end of the Gmail preheader. That is likely what’s pushing especially concerned users (those who we are first and foremost interested in within the confines of this campaign) to open the letters more often. We saw a similar effect with a welcome chain when we tested the content of emails: the phrase “Welcome aboard!”, which accidentally ended up in the preheader, improved the open rate by over 3%.

Lesson? You need to pay attention to these kinds of effects, as they influence the end result of the mechanics and you should never neglect testing subjects. In most cases, testing subjects isn’t enough to draw some general conceptual conclusions, but the good news is that we don’t need to know the reason for success when testing continuously triggered mailings. It’s enough to simply improve the indicators and continue to use the optimal version on a regular basis.

From particulars to universals

Nonetheless, enormous amounts of data sometimes show consistent general patterns. Their effects are clear enough to notice, even bearing in mind all of the mentioned peculiarities. Maestra filters and reports really help in the search for such insights. It may well be the most challenging part of the functionality to comprehend, but it’s the part I love most. To show you the rewards of conquering these intimidating jungles, I’ll share with you a story from our daily lives.

As was already mentioned, we do mailings in several languages. Five big European countries have their own localized versions for communicating in their native languages, but our main market is North America. Additionally, the English-speaking segment also includes a big chunk of the base represented by users from various countries of Asia.

Regional teams localize the biggest news hooks for the product, but localizing everything isn’t feasible with respect to the outcome and resource consumption. At the same time, it seems like, with no high pressure for regional bases, it would be unreasonable to deprive regional users of specific information about the product.

Led by this idea, we sometimes send field-specific emails in English to specific segments. We pick them based on various behavior related to the product, never minding the locale. For example, we sent the Internal Link Building study to active users of three thematically similar SEMrush tools (Site Audit, Backlink Audit, and Backlinks) regardless of their country. Moreover, if there is a field-specific product email and a mass localized mailing planned for the same day, we send out the first one based on priority and exclude the user from the second one. We consider that in this way, we maximize the probability of a user receiving content relevant to them.

But some of the regional marketing representatives don’t agree with this approach. They argue that regional users don’t speak English well enough and that this doesn’t fit with the brand character. We decided to shed some light on the matter using numbers.

The plan of action was such:

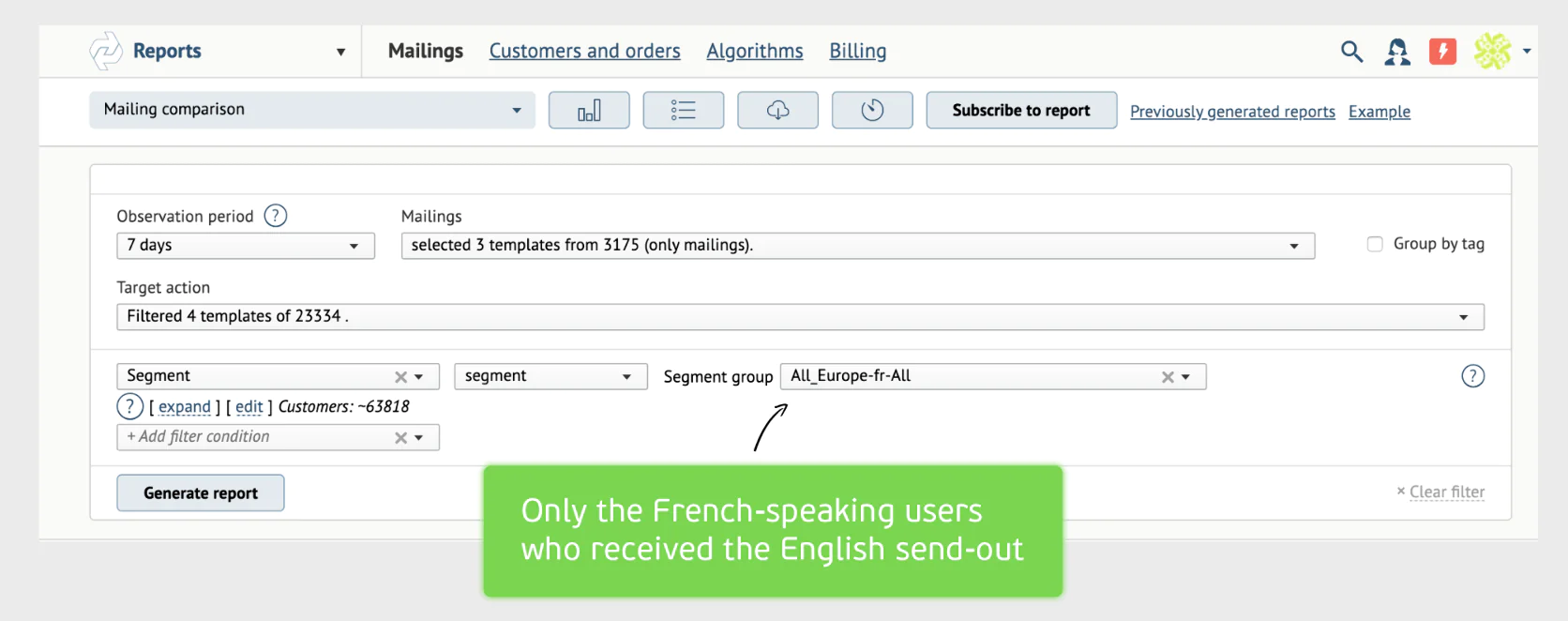

- First, on our internal mailing calendar, we chose send-outs aimed at mixed segments.

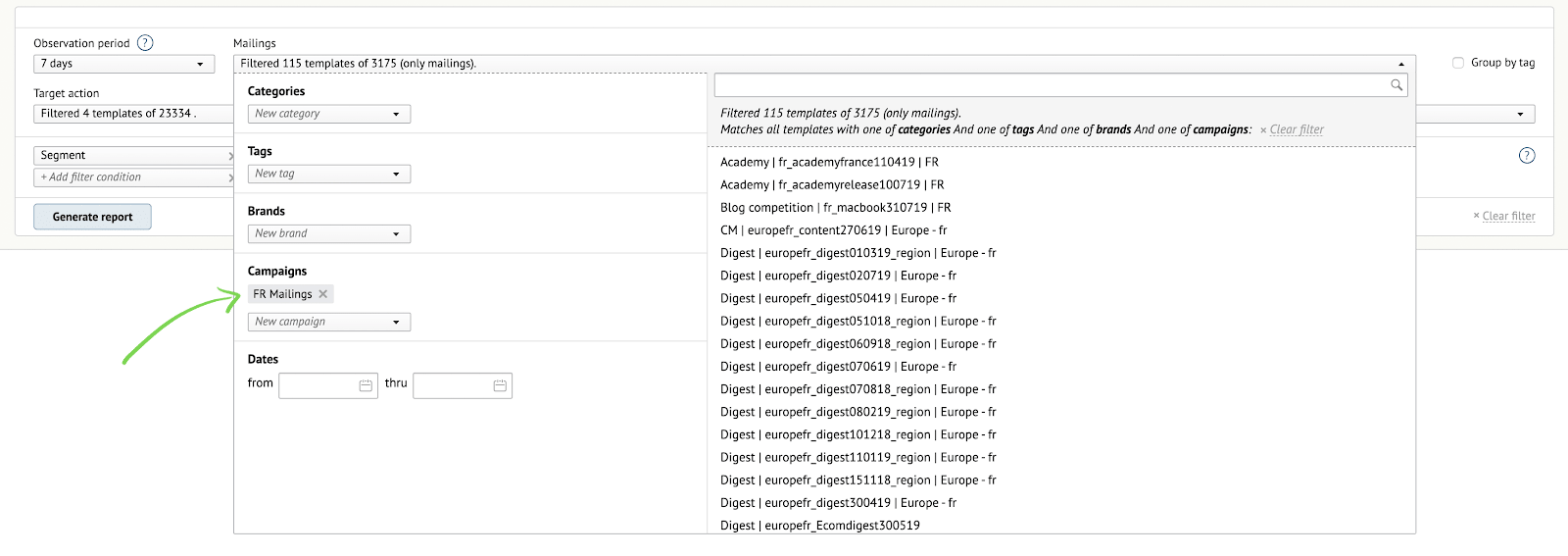

- Then we picked the mailing of interest in the “Send-out comparison” report and used additional filters to form a report only for users that received them under the English campaign but are actually part of the French/Portuguese/Spanish-speaking segment and exported the report in CSV.

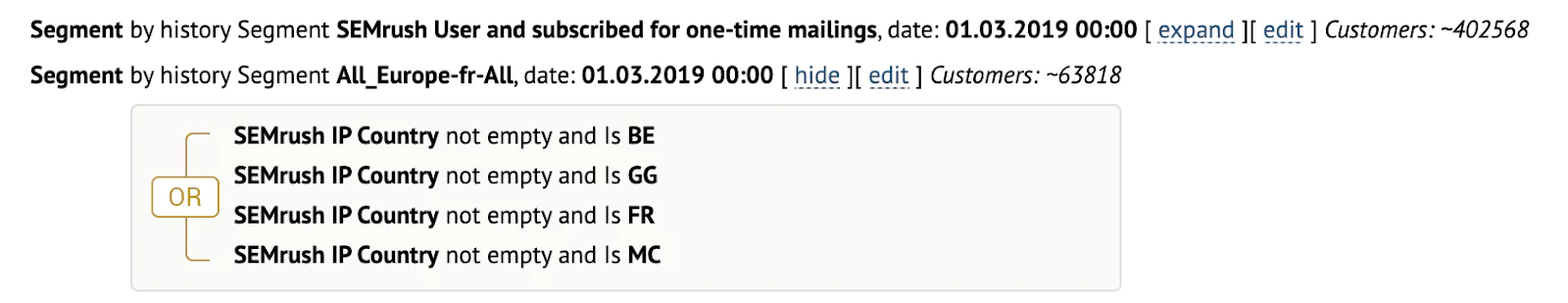

- In order to understand if users react better or worse to such segmented mailings in English, as opposed to mass ones, but in their native language, we wanted to calculate the average across single, unassociated mailings in each region. To do so, we filtered all mailings in the “Single FR mailings” campaign from the date on which we had a change in the unsub due to inactivity criteria (analogous to other regions):

- With the help of the filters, we restored the size of the base for each region at the time of sending various mailings (taking into account subscription status, exclusions due to inactivity in the channel, exclusion from one-time communications during the welcome period, and so on).

- In the end, thanks to the filters and exported data we got this file that contains the following data for each region:

  - Mailing, date, template link.

  - How many were sent out in absolute terms.

  - What % of the overall regional database it was at that point in time.

  - Opens, clicks, and unsubscriptions (in absolute numbers + %).

  - The difference between the open rate, click rate, and opt-out rate for a specific product mailing for the English version and the average figure for corresponding mass localized mailings.

- We also checked if the difference is significant for each indicator, in terms of sum amounts for all mailings in these two categories for the period of interest.

Based on this data, we can draw two important observations.

First of all, almost all mailings based on product behavior segmentation have higher indicators than the regional average. If we compare the total opens, clicks, and unsubs for the two mailing categories, taking the average send volume into account, then it’s clear that the differences are either in favor of product mailings or are not significant. There is not a single region or indicator, however it is averaged, where product mailings lose to mass localized ones.

This supports our hypothesis that segmentation based on product behavior is stronger than location-based segmentation. Accordingly, if a user meets both criteria of mailings from these categories on the same day, it’s justifiable to choose the first one. It’s likely that segmentation based on both criteria at the same time would be even more effective, but minding limited resources and the size of the regional base it’s not profitable.
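As a rough illustration of the comparison above, the sketch below pools opens, clicks, and unsubscribes across all mailings in each category, divides by the total number sent, and reports the difference between the two categories. The field names and all numbers are hypothetical; the real figures came from the exported Maestra reports and are not reproduced in this article.

```python
def pooled_rate(mailings, metric):
    """Total count of `metric` divided by total emails sent across mailings."""
    sent = sum(m["sent"] for m in mailings)
    return sum(m[metric] for m in mailings) / sent if sent else 0.0


def compare_categories(product, mass, metrics=("opens", "clicks", "unsubs")):
    """Difference in pooled rates (product minus mass) for each metric."""
    return {m: pooled_rate(product, m) - pooled_rate(mass, m) for m in metrics}


# Hypothetical export rows for one region, NOT real data.
product_mailings = [
    {"sent": 200, "opens": 60, "clicks": 20, "unsubs": 1},
    {"sent": 300, "opens": 90, "clicks": 25, "unsubs": 1},
]
mass_mailings = [
    {"sent": 10_000, "opens": 2_000, "clicks": 400, "unsubs": 50},
    {"sent": 10_000, "opens": 2_200, "clicks": 500, "unsubs": 50},
]

for metric, diff in compare_categories(product_mailings, mass_mailings).items():
    print(f"{metric}: {diff:+.1%}")
```

Pooling totals rather than averaging per-mailing percentages keeps small mailings from being weighted the same as large ones.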

Secondly, the share of regional clients who receive such sector-specific mailings is minimal: it can be measured in hundreds. That is why, as long as we guarantee the careful exclusion of these users from parallel mailings, there’s no big reason for polemic.

Errare humanum est

That’s right. Even in a world full of automation, there is always room for a human factor.

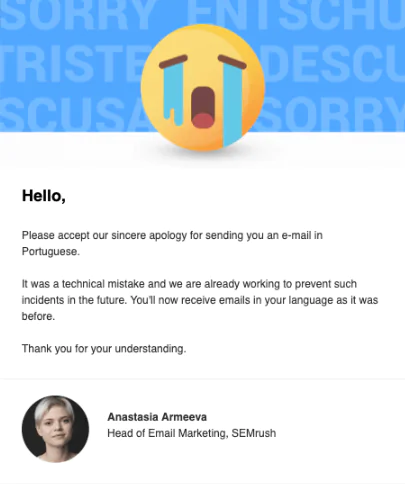

Once upon a time, we sent a letter with a recorded Portuguese webinar to several thousand users from other countries. They didn’t even know this webinar existed nor did they take part in it.

And as you know, once you click send, you can’t undo it. Meanwhile, sending an apology letter to everyone would be overly courteous, which is why we sent that letter only to users who had already opened the previous one. We then also set up a trigger that reacts to new opens.

It turned out to be very cute:

All of these actions (layout creation, a one-off mailing, and setting up a trigger) took us around an hour.

Users really appreciated our humanity and responsiveness. During the first day, I got 176 replies: 112 of them were positive, 59 were neutral, and only 5 were negative.

I can’t resist sharing some of the most memorable ones with you:

errare humanum est :-)

Great job emailing immediately after it happened. You’re fucking on it! Keep up the great work.

Well done! Quick to identify a problem and quick to react. Clap, clap, clap 😀

DEAREST ANASTASIA,

THE FACE BELOW IS SOO ADORABLE! PLEASE DO NOT CRY! PLEASE SMILE!

Too cute of an email to be mad at

Forgiven! Just keep sending me other great stuff!

This episode provoked a very concentrated surge of dopamine in our team, because you could really feel that there are real people on the other side who also value human contact made just for them. I believe that email marketers should never forget about that.